Open Topics for Student Projects

Below is a collection of proposed topics for Bachelor's or Master's theses and/or lab courses. All students are also invited to contact us with their own topic ideas.

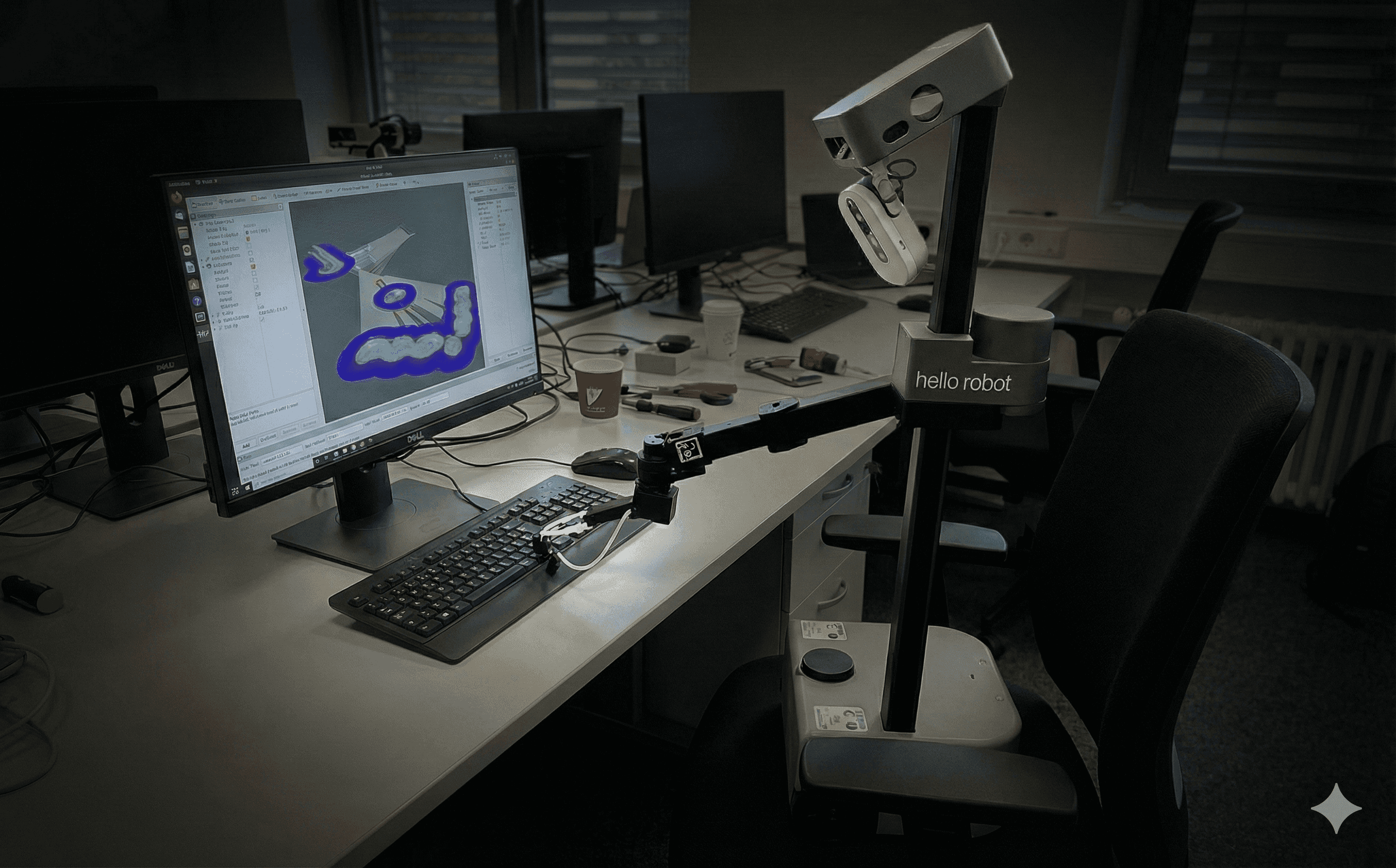

Robot Exploration that Includes Storage Furniture

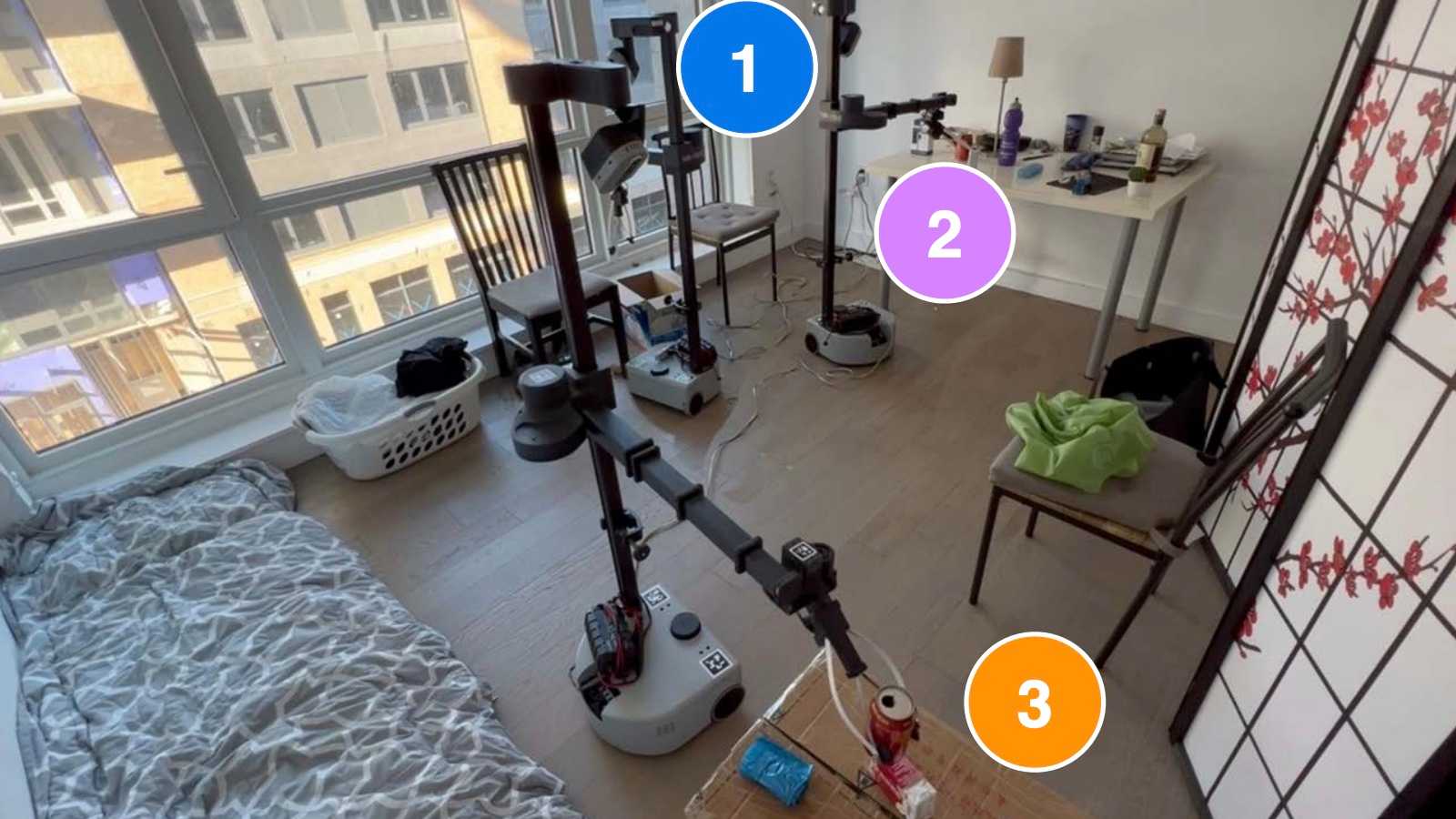

Object search is the problem of letting a robot find an object of interest. To do so, the robot has to explore the environment it is placed in until the object is found. To explore an environment, current robotic methods use geometric sensing, e.g. stereo cameras, LiDAR sensors or similar. With these they create a 3D reconstruction of the environment that clearly distinguishes 'known & occupied', 'known & unoccupied' and 'unknown' regions of space.
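The sketch below makes the three-way distinction of space concrete. It is a minimal sparse voxel map that assumes integer voxel coordinates and a crude straight-line ray traversal. A real system would use proper ray casting and a library such as Open3D or an octree map. The hypothetical `frontiers()` method returns free voxels that border unknown space. Classic frontier-based exploration drives the robot towards exactly these voxels.

```python
from enum import Enum


class Cell(Enum):
    UNKNOWN = 0   # never observed
    FREE = 1      # known & unoccupied
    OCCUPIED = 2  # known & occupied


# 6-connected neighbourhood in 3D
NEIGHBOUR_OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


class VoxelMap:
    """Hypothetical sparse voxel map: voxels absent from the dict are UNKNOWN."""

    def __init__(self, size):
        self.size = size  # (nx, ny, nz) number of voxels per axis
        self.cells = {}

    def in_bounds(self, v):
        return all(0 <= c < s for c, s in zip(v, self.size))

    def get(self, v):
        return self.cells.get(v, Cell.UNKNOWN)

    def integrate_ray(self, origin, hit):
        """Mark voxels between the sensor origin and the hit point as free, the hit as occupied.

        Crude straight-line sampling; assumes integer voxel coordinates.
        """
        steps = max(abs(h - o) for o, h in zip(origin, hit))
        for i in range(steps):
            v = tuple(round(o + (h - o) * i / steps) for o, h in zip(origin, hit))
            if self.get(v) is not Cell.OCCUPIED:  # do not erase previously seen obstacles
                self.cells[v] = Cell.FREE
        self.cells[tuple(hit)] = Cell.OCCUPIED

    def neighbours(self, v):
        for d in NEIGHBOUR_OFFSETS:
            n = tuple(a + b for a, b in zip(v, d))
            if self.in_bounds(n):
                yield n

    def frontiers(self):
        """Free voxels that touch unknown space, i.e. candidate exploration targets."""
        return sorted(
            v for v, state in self.cells.items()
            if state is Cell.FREE and any(self.get(n) is Cell.UNKNOWN for n in self.neighbours(v))
        )


m = VoxelMap((5, 5, 1))
m.integrate_ray((0, 0, 0), (3, 0, 0))
print(m.frontiers())  # [(0, 0, 0), (1, 0, 0), (2, 0, 0)]
```

A map like this has no notion of drawers or doors. The space inside a closed cabinet simply stays unknown or occupied, which is the gap this project addresses.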

The problem with the classic geometric sensing approach is that it has no knowledge of, e.g., doors, drawers, or other functional and dynamic elements. These, however, are easy to detect in images. We therefore want to extend prior object search methods such as https://naoki.io/portfolio/vlfm with an algorithm that can also search through drawers and cabinets. The project will require you to train your own detector network to detect possible locations of an object, and then to implement a robot planning algorithm that explores all detected locations.
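One simple way to order the visits is sketched below under our own assumptions; it is not the intended solution. Each detected drawer or cabinet becomes a candidate with a 2D position and a hypothetical detector score. The robot then greedily visits the candidate with the best score-per-distance ratio next. The sketch ignores obstacles (it uses straight-line distance via `math.dist`) and the cost of opening furniture. A thesis would replace it with a proper planner.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    position: tuple  # (x, y) in the map frame, metres
    score: float     # hypothetical detector confidence that the object could be stored here


def plan_visit_order(start, candidates):
    """Greedy ordering: repeatedly pick the candidate with the highest score per metre travelled."""
    remaining = list(candidates)
    order = []
    current = start
    while remaining:
        best = max(
            remaining,
            # small epsilon avoids division by zero if the robot stands on a candidate
            key=lambda c: c.score / (math.dist(current, c.position) + 1e-6),
        )
        order.append(best)
        remaining.remove(best)
        current = best.position
    return order


candidates = [
    Candidate("drawer_a", (1.0, 0.0), 0.1),
    Candidate("cabinet_b", (2.0, 0.0), 0.9),
    Candidate("drawer_c", (5.0, 0.0), 0.2),
]
print([c.name for c in plan_visit_order((0.0, 0.0), candidates)])
# ['cabinet_b', 'drawer_a', 'drawer_c']
```

Weighting by detector score makes the robot check the confident cabinet before the slightly closer but unlikely drawer. Pure nearest-neighbour ordering would do the opposite.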

Requirements

Experience with Python and PyTorch, ideally also with Open3D.

Contact

Please send your CV and transcript to blumh@uni-bonn.de

Continual Test-Time-Optimization for Vision Foundation Models

Robots have access to large amounts of data that they can collect from their deployment environments. We want to tap into this resource to optimize foundation models such as DINO so that they work optimally in these deployment environments. We also want to leverage the scale of long-term deployment to improve them for downstream applications such as object identification and tracking.

Requirements

Experience with Python and PyTorch.

Contact

Please send your CV and transcript to blumh@uni-bonn.de

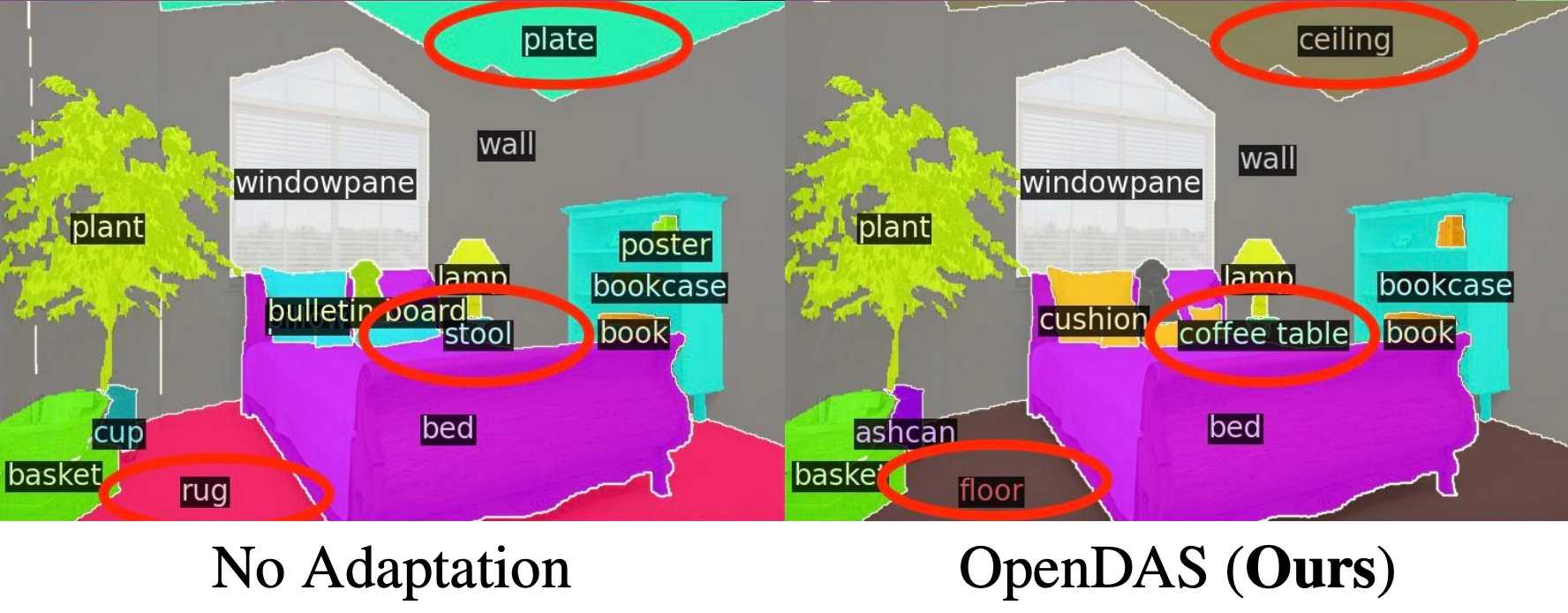

Self-supervised Adaptation for Open-Vocabulary Segmentation

Multimodal Large Language Models (MLLMs) have pushed the applicability of scene understanding in robotics to new limits. They make it possible to link natural language instructions directly to robotic scene understanding. However, MLLMs trained on internet data sometimes have trouble understanding more domain-specific language queries, such as "bring me the 9er wrench" or "pick all the plants that are not beta vulgaris". This project builds on prior work that developed a mechanism to adapt open-vocabulary methods to new words and visual appearances (OpenDAS). Currently, the method is impractical because it requires many densely annotated images from the target domain. We want to develop mechanisms that allow such adaptation to be done in a self-supervised way. One example is letting the robot look at the same object from multiple viewpoints and enforcing consistency of the representation.
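The consistency idea can be illustrated with one possible objective. This is our own assumption, not the OpenDAS formulation. The sketch scores how far the features of one object, observed from several viewpoints, deviate from their mean direction. It assumes the per-view feature vectors have already been associated with the same object, e.g. via known camera poses and depth. It is written in plain Python for readability. In practice it would be written in PyTorch so that the loss can be backpropagated into the model being adapted.

```python
import math


def normalize(v):
    """Scale a (non-zero) feature vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_similarity(a, b):
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def multiview_consistency_loss(views):
    """Mean of (1 - cosine similarity) between each view's feature and the mean feature.

    `views` is a list of equally sized feature vectors describing the same object
    seen from different viewpoints. The loss is 0 when all views point in the same
    direction. Assumes the mean feature is non-zero.
    """
    dim = len(views[0])
    mean = [sum(v[i] for v in views) / len(views) for i in range(dim)]
    return sum(1.0 - cosine_similarity(v, mean) for v in views) / len(views)


print(multiview_consistency_loss([[1.0, 2.0], [2.0, 4.0]]))  # approximately 0
print(multiview_consistency_loss([[1.0, 0.0], [0.0, 1.0]]))  # approximately 0.293
```

Minimizing such a loss alone could collapse all features to a single vector. A real method would combine it with a term that keeps different objects apart.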

Requirements

Experience with Python and PyTorch.

Contact

Please send your CV and transcript to blumh@uni-bonn.de

VLMs as roboticists

As VLMs grow more capable, robotics research has focused primarily on direct applications, e.g. VLAs and spatial reasoning. However, VLMs are now proficient at 'computer use' and coding, interacting with standard interfaces like web browsers. We propose to leverage this capability for robotics by enabling VLMs to use the tools roboticists use daily, such as RViz for navigation.

Growing Scene Graphs from Observations

As you walk around a room wearing smart glasses, or as a robot explores a room, we build a growing representation of all observed objects. As soon as the robot or human interacts with an object, we update its pose accordingly. If a door is opened or a drawer pulled out, we update the observed dimensions and add the objects inside as leaves of the drawer node. In this way, we grow a complete, dynamic representation of the scene over time.
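Below is a minimal sketch of such a growing scene graph. It assumes a simplified representation in which each node stores only a world-frame position and an extent, with no orientation and no uncertainty. The class and method names (`SceneGraph`, `observe`, `move`, `open_container`) are hypothetical. They only illustrate the three update rules described above:

- add new objects,
- update poses after an interaction,
- attach the contents of opened furniture as leaves.

```python
from dataclasses import dataclass, field


@dataclass
class SceneNode:
    name: str
    position: tuple    # (x, y, z) in the world frame; orientation omitted for brevity
    dimensions: tuple  # observed (width, depth, height) in metres
    children: dict = field(default_factory=dict)


class SceneGraph:
    def __init__(self):
        self.root = SceneNode("room", (0.0, 0.0, 0.0), (0.0, 0.0, 0.0))
        self.nodes = {"room": self.root}
        self.parent_of = {}

    def observe(self, name, position, dimensions, parent="room"):
        """Add a newly observed object under `parent`, or refresh an already known one."""
        if name in self.nodes:
            node = self.nodes[name]  # known objects are refreshed, not re-parented
            node.position, node.dimensions = position, dimensions
            return node
        node = SceneNode(name, position, dimensions)
        self.nodes[parent].children[name] = node
        self.nodes[name] = node
        self.parent_of[name] = parent
        return node

    def move(self, name, new_position):
        """The robot or human interacted with the object: update its pose."""
        self.nodes[name].position = new_position

    def open_container(self, name, opened_dimensions, contents):
        """A door was opened or a drawer pulled out.

        Updates the container's observed dimensions and attaches each newly seen
        (name, position, dimensions) tuple in `contents` as a leaf of the container.
        """
        self.nodes[name].dimensions = opened_dimensions
        for item_name, position, dimensions in contents:
            self.observe(item_name, position, dimensions, parent=name)

    def leaves(self, name):
        """Names of all leaf nodes in the subtree rooted at `name`."""
        node = self.nodes[name]
        if not node.children:
            return [name]
        return [leaf for child in node.children for leaf in self.leaves(child)]


g = SceneGraph()
g.observe("cabinet", (2.0, 0.0, 0.0), (0.8, 0.5, 1.0))
g.observe("drawer", (2.0, 0.3, 0.5), (0.6, 0.4, 0.2), parent="cabinet")
g.open_container("drawer", (0.6, 0.8, 0.2), [("spoon", (2.0, 0.7, 0.55), (0.2, 0.04, 0.02))])
g.move("spoon", (1.0, 1.0, 0.9))
print(g.leaves("room"))  # ['spoon']
```

For simplicity, poses here are stored in the world frame, so moving a drawer does not move its contents. Storing poses relative to the parent node would fix this.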

Requirements

Experience with a Python deep learning framework; understanding of 3D scene and camera geometry.

Contact

Please send your CV and transcript to blumh@uni-bonn.de